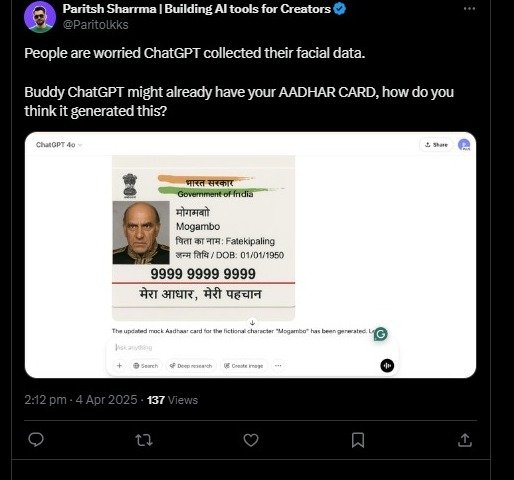

Greater Noida (National Desk): OpenAI's ChatGPT, known for its cutting-edge language capabilities, is now embroiled in fresh controversy because of its technological advances. It has recently come to light that ChatGPT, whose capabilities have improved significantly, may now be able to create fake government identity documents such as Aadhaar cards and PAN cards. The discovery has once again highlighted privacy and security concerns and raised the question of whether we are entering a new era of cybercrime.

As ChatGPT has developed, it has become very easy for users to create fake documents from simple instructions. These documents look accurate enough that anyone could use them to commit fraud. Some social media users recently reported that they had created fake copies of Aadhaar and PAN cards with ChatGPT. One user, Yashwant Sai Palaghat, expressed concern, saying, "ChatGPT is creating fake Aadhaar and PAN cards instantly, which is a serious security risk. This is why AI should be regulated to a certain extent."

Photos of fake IDs posted on social media

Similarly, another user, Piku, said he asked ChatGPT to create an Aadhaar card using only a name, date of birth and address, and the AI produced a near-perfect replica. He also asked, "We keep talking about data privacy, but who is selling these Aadhaar and PAN card datasets to AI companies to create such models? Otherwise how can it know the format so accurately?" His question raises serious concerns about whether this development in AI threatens our personal security and privacy.

Can the growing capabilities of AI promote cybercrime?

Although ChatGPT does not use people's actual personal records to create these documents, it can still generate fake documents from publicly available information. This capability creates a new threat: criminals can easily produce fake documents and use them for cyber fraud, identity theft and other crimes. The problem is compounded because each advance in ChatGPT's capabilities widens the scope for misuse.

Can it become a serious security crisis?

The growing capabilities of ChatGPT and other AI models clearly pose a major security threat. Cybercriminals may now find it easier to commit fraud by creating fake documents. The development also deepens existing privacy and data-security concerns around AI models. If such technologies are not controlled, they could threaten not only the security of personal information but also social and legal systems.

What steps are being taken?

Given this growing threat, experts and officials believe strict laws and regulations are needed to govern AI models. AI companies should ensure that the technologies they develop are used only for legitimate purposes and are not misused. Users also need to be made aware of the importance of using AI responsibly.

The growing capabilities of ChatGPT and other AI technologies are becoming a real security challenge. Left unchecked, they could facilitate cybercrime and the theft of personal information. It is therefore essential that the use of AI be properly monitored and regulated so that these technological advances are not misused.